dpi-checkers

RU :: TCP 16-20 DWC (domain whitelist checker)

Lets you find out which items are whitelisted on DPI systems that apply the TCP 16-20 blocking method.

This kind of information can be interesting in its own right as well as useful for bypassing limitations.

⚠️ Being on the whitelist means, among other things, that websites (more specifically, domains and subdomains) on this list load without the limitations of the TCP 16-20 blocking method. The censor's decision is based on the SNI in the TLS handshake and possibly on the HTTP Host header if plain HTTP is used.

Ready-to-use results

Not everyone will want to run this script themselves, especially because it can run for quite a long time and because its implementation is naive and uses a brute-force method. That's why the committers of this repository have already done this work. The top 10k popular domains from the OpenDNS list were used as the input list (unfortunately, this file was last updated on 2014-11-06, but it is still generally up to date).

Last Updated: 2025-07-02

Last File: /ru/tcp-16-20_dwc/results/based_on_opendns_2025-07-02.txt

Latest stats: 266 domains out of 10'000 (2.66%) are whitelisted

File Format: .csv/.md table with | Domain | Provider | Country | header

⚠️ upd.: We found that whitelists can vary significantly between operators. Nevertheless, on average a small number of results do intersect. So you can check the operators you care about using the methodology described here, intersect their results, and use the common domains as needed.
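A minimal sketch of the intersection step, assuming each operator's results are stored as text in the | Domain | Provider | Country | table layout mentioned above. The function names `parse_domains` and `common_whitelist` are hypothetical and not part of the repository.

```python
from functools import reduce


def parse_domains(table_text):
    """Extract the Domain column from a '| Domain | Provider | Country |' table."""
    domains = set()
    for line in table_text.splitlines():
        cells = [c.strip() for c in line.strip().strip("|").split("|")]
        if not cells or not cells[0]:
            continue  # blank line or empty first cell
        first = cells[0].lower()
        # Skip the header row and a markdown separator row such as |---|---|---|.
        if first == "domain" or set(first) <= set("-: "):
            continue
        domains.add(first)
    return domains


def common_whitelist(tables):
    """Return the domains that appear in every operator's result table."""
    sets = [parse_domains(t) for t in tables]
    if not sets:
        return set()
    return reduce(set.intersection, sets)
```

Passing the contents of two or more result files to `common_whitelist` would yield only the domains that every tested operator lets through.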

Notes

As far as we know, the whitelist is created using the *.domain.com:* scheme. Thus, you can (and should?) use subdomains of the found domains (if site.com works, then foo.site.com and foo.bar.site.com will also work).
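To illustrate the subdomain rule, here is a small sketch that decides whether a host name would be covered by a list of whitelisted domains. It assumes the matching works as described above (a whitelisted domain also covers all of its subdomains, on any port); `normalize` and `is_covered` are hypothetical helper names.

```python
def normalize(host):
    """Lowercase a host name and drop any :port suffix and trailing dot."""
    host = host.strip().lower()
    host = host.split(":", 1)[0]
    return host.rstrip(".")


def is_covered(host, whitelisted_domains):
    """True if host equals a whitelisted domain or is one of its subdomains."""
    host = normalize(host)
    for domain in whitelisted_domains:
        domain = normalize(domain)
        if not domain:
            continue  # ignore empty entries
        # The leading dot keeps "notsite.com" from matching "site.com".
        if host == domain or host.endswith("." + domain):
            return True
    return False
```

For example, with `{"site.com"}` as the whitelist, `is_covered("foo.bar.site.com", ...)` is true while `is_covered("notsite.com", ...)` is false.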

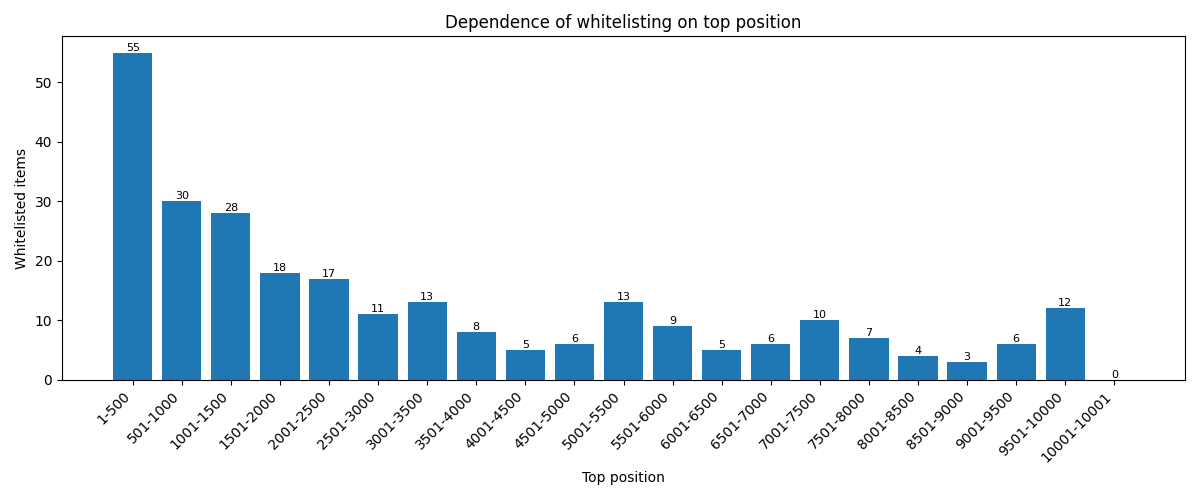

We also bring to your attention a graph that shows how the likelihood of a domain being on the whitelist depends on its position in the popularity ranking (provided by OpenDNS).

It can be seen that there is a correlation between these properties (which is generally logical).
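One way to look at this relationship numerically is to compute the share of whitelisted domains per rank bucket. This is only a sketch and not necessarily how the graph was produced: it assumes the input list is ordered by popularity, and `whitelist_share_by_rank` is a hypothetical name.

```python
def whitelist_share_by_rank(ranked_domains, whitelisted, bucket_size=1000):
    """Return (first_rank, last_rank, share_whitelisted) for each rank bucket."""
    whitelisted = {d.lower() for d in whitelisted}
    rows = []
    for start in range(0, len(ranked_domains), bucket_size):
        bucket = ranked_domains[start:start + bucket_size]
        hits = sum(1 for d in bucket if d.lower() in whitelisted)
        # Ranks are 1-based; the last bucket may be shorter than bucket_size.
        rows.append((start + 1, start + len(bucket), hits / len(bucket)))
    return rows
```

Comparing the share across buckets shows whether it changes with rank.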


Self-running the script

- First of all, you need an input list of domains to test. The script does not retrieve the full whitelist; it only checks whether each entry of your input list is included in the DPI whitelist. You can use the OpenDNS lists as a starting point (see above) or, for example, Cloudflare Radar.

- You will need a remote server in a “suspicious” network (i.e. one that your “home” ISP limits with the TCP 16-20 blocking method). On that server, install a web server with HTTPS (a self-signed certificate made with openssl or similar is fine, since the script ignores validation) that responds the same way regardless of the SNI passed. It should also serve a file of at least 128 KB (as transferred over the network, i.e. after any compression) at some path in response to a GET request.

  As such a server you can use nginx with approximately the following configuration:

      server {
          listen 443 ssl default_server;
          ssl_certificate /path/to/cert.crt;
          ssl_certificate_key /path/to/cert.key;
          root /var/www/html;
          location / {
              try_files $uri $uri/ =404;
          }
      }

  A static file can be generated like this (in this case, 1 MB in size):

      dd if=/dev/urandom of=/var/www/html/1MB.bin bs=1M count=1

  Don't forget to open the HTTPS port (443).

- Finally, you can run the script on your local machine. It must have Python 3 and the curl utility installed and must access the internet through an ISP whose DPI uses the TCP 16-20 blocking method. A POSIX-compatible OS (Linux, macOS, etc.) is recommended. The script has the following parameters:

  | Parameter | Default | Required | Description |
  |---|---|---|---|
  | -i | in.txt | No | Path to the file with the list of domains to check. |
  | -o | out.txt | No | Path to the results file. The domains that are included in the whitelist will be saved there. |
  | -e | err.txt | No | Path to the error file. |
  | -u | none | Yes | URL path where the static file is located. |
  | -d | none | Yes | IP of your destination server from the previous step. |
  | -t | 5 | No | Connection/read timeout in seconds. |
  | -r | 65535 | No | Upper bound of the range of bytes to be downloaded. |

  Example of a run:

python domain_whitelist_checker.py -u /1MB.bin -d 1.2.3.4

The script is single-threaded, but you can parallelize it with, for example, the GNU parallel utility. You can also run the result file through this script to find out which providers the domain owners are likely using, as well as the country.
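The steps above can be sketched as a single probe per domain. This is not the code of domain_whitelist_checker.py, only an illustration under stated assumptions: the probe sends the tested domain as SNI while connecting to your own server, requests the first bytes of the static file, and treats a complete transfer as a sign that the connection was not cut off. The function names are hypothetical. The curl options used (`--resolve`, `--range`, `--insecure`, `--write-out`) are standard ones, but using `--max-time` as the overall timeout is an assumption about how the timeout is applied.

```python
import os
import subprocess


def build_curl_command(domain, dest_ip, path, timeout=5, range_end=65535):
    """Build a curl call that sends `domain` as SNI/Host but connects to dest_ip."""
    return [
        "curl",
        "--silent",
        "--insecure",                       # the test server may use a self-signed cert
        "--output", os.devnull,             # the payload itself is not needed
        "--write-out", "%{size_download}",  # print the number of bytes received
        "--connect-timeout", str(timeout),
        "--max-time", str(timeout),
        "--range", f"0-{range_end}",
        "--resolve", f"{domain}:443:{dest_ip}",
        f"https://{domain}{path}",
    ]


def looks_whitelisted(bytes_received, range_end=65535):
    """Assumption: receiving the whole requested range means no cut-off occurred."""
    return bytes_received >= range_end + 1


def probe(domain, dest_ip, path, timeout=5, range_end=65535):
    """Run curl once and classify the domain; needs curl and network access."""
    cmd = build_curl_command(domain, dest_ip, path, timeout, range_end)
    result = subprocess.run(cmd, capture_output=True, text=True)
    try:
        received = int(result.stdout.strip() or 0)
    except ValueError:
        received = 0
    return looks_whitelisted(received, range_end)
```

Calling `probe("example.com", "1.2.3.4", "/1MB.bin")` mirrors the example run above for one domain. Each call is independent of the others, so the input list can be split across several processes.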

Contributing

We would be happy if you could help us improve our checkers through pull requests or by creating issues. You can also star the repository so you don't lose track of the checkers. The repository is available here.